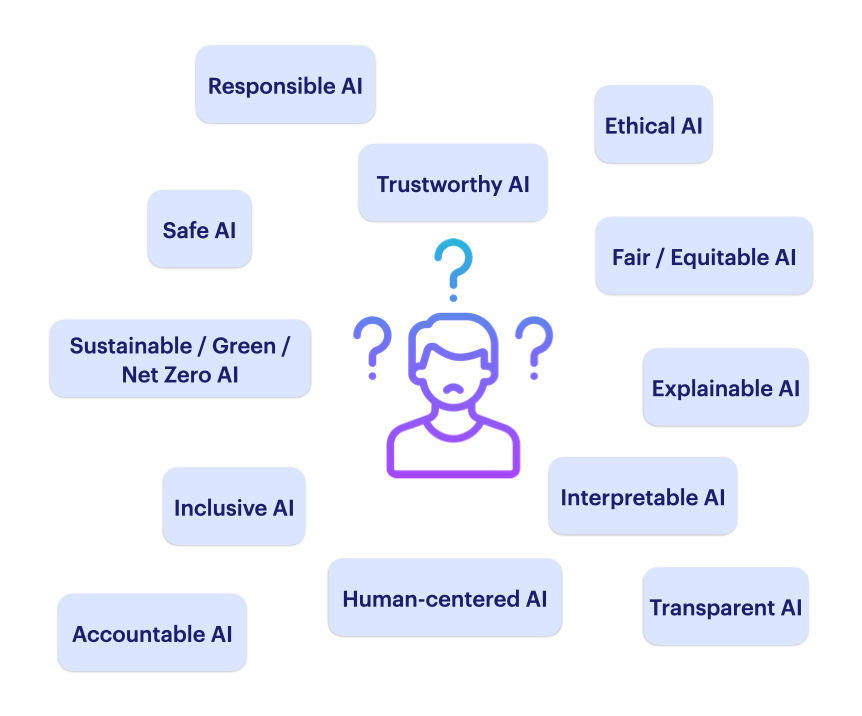

Understanding AI Values: The Meanings, Subtleties & Nuances

With AI advancing every day, new values and principles are being defined to ensure that it is used responsibly, with respect for humans, other living beings, and the planet as a whole.

Along the way, many terms have emerged. Some are self-explanatory, while others create confusion with their subtle differences in meaning.

This post is meant to unpack the terminology around AI ethics and values, and to clarify the contexts in which each is used. For readers who want to go deeper, resources are provided at the end.

Trustworthy AI:

Is the overall qualification of an AI system. According to the Ethics Guidelines for Trustworthy Artificial Intelligence presented by the High-Level Expert Group on AI, for an AI system to qualify as trustworthy, it should meet the following seven requirements:

- Human agency and oversight: AI supports human decision-making and rights, with proper control through human-in-the-loop, on-the-loop, or in-command approaches.

- Technical robustness and safety: The system is safe, resilient, reproducible, and reliable, with fallback plans to prevent unintentional harm (risk management).

- Privacy and data governance: Beyond privacy and protection, AI needs strong data governance: quality, integrity, and legitimate access.

- Transparency: AI must be transparent and traceable, with decisions explained clearly to stakeholders and users informed that they are interacting with an AI system, as well as of its abilities and limits.

- Diversity, non-discrimination and fairness: The AI system avoids bias, promotes diversity, ensures accessibility, and involves stakeholders throughout the AI lifecycle.

- Societal and environmental well-being: AI should be sustainable, eco-friendly, and socially responsible for present and future generations.

- Accountability: Mechanisms must ensure AI accountability through audits of algorithms, processes, and data, as well as accessible ways to address issues or harms.

In summary, these guidelines state that trustworthy AI should be robust, lawful, and ethical.

Responsible AI:

Represents the actions taken to ensure a trustworthy AI system. According to ISO, responsible AI aims to build trustworthy systems that are dependable, fair, and in line with human principles. The ultimate objective is to build and use AI systems that serve society while minimizing harmful outcomes.

Ethical AI:

Emphasizes the moral and human aspects in AI systems. UNESCO’s Recommendation on the Ethics of Artificial Intelligence centers on protecting human rights and dignity, guided by principles like fairness and transparency, with human oversight of AI as a key priority.

According to TechTarget, ethical AI focuses on moral considerations such as fairness, societal well-being, and privacy.

Safe AI:

Focuses on making sure AI systems function reliably and securely while avoiding harm. Safe AI means operating within established safety limits, with an emphasis on risk mitigation and protecting users.

Fair / Equitable AI:

Ensures that individuals and data subjects are treated equitably and impartially, preventing unfair outcomes or biases related to race, gender, age, nationality, or religious and political beliefs. The aim is to avoid AI systems worsening existing disparities, particularly for already underprivileged groups.

Fairness is also procedural, meaning all individuals should be able to dispute AI-driven decisions and pursue correction when those decisions are unfair or mistaken.
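To make the idea of unequal outcomes concrete, here is a minimal sketch of one possible fairness check: comparing the rate of favorable decisions across groups. This metric is often called the demographic parity gap; it is only one of several fairness measures and is used here as an illustrative assumption, not as the definition of fair AI. The function names and the sample data are hypothetical.

```python
from collections import defaultdict


def positive_rate_by_group(records):
    """Return the share of favorable decisions for each group.

    `records` is an iterable of (group, decision) pairs, where decision
    is 1 for a favorable outcome and 0 otherwise.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Difference between the highest and lowest group rates (0 means equal)."""
    rates = positive_rate_by_group(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions for two groups, "A" and "B".
decisions = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

rates = positive_rate_by_group(decisions)
gap = demographic_parity_gap(decisions)
print(rates)
print(gap)
```

A large gap does not prove discrimination on its own, but it flags where a closer review of the data and the decision process is warranted.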

Inclusive AI:

Involves creating AI systems that account for the diverse needs and experiences of all people, not only the majority. It emphasizes:

- minimizing bias in AI development, deployment, and assessment

- widening access to AI tools and education

- including a variety of perspectives in the design and governance of AI systems

Accountable AI:

Refers to the principle that AI should be designed, implemented, and used in a way that makes it possible to hold responsible parties accountable for any harmful outcomes.

According to the paper by Novelli et al., accountability is central to AI governance. Put in terms of answerability, it requires recognizing authority, enabling questioning, and setting limits on power. It can be understood through seven aspects: context, scope, responsible agent, decision forum, standards, processes, and consequences. It serves key governance goals: compliance, reporting, oversight, and enforcement.

According to the European Data Protection Supervisor (EDPS), transparency, interpretability, and explainability in AI have no official definitions. However, the three concepts follow a logical progression, presented below.

Transparent AI:

Is the capacity of a model to be comprehended. It involves openly communicating a system’s purpose, its role within the organization, its potential benefits and risks, and its expected effects on people. It also implies clarifying the reasoning behind the AI model’s decisions.

A transparent AI system allows accountability by letting stakeholders review and examine its decisions, identify biases or inequities, and confirm that it functions according to ethical guidelines and legal obligations.

Interpretable AI:

Relates to how well humans can make sense of a ‘black box’ model or its decisions. Models with low interpretability are considered opaque, as it is often unclear how or why a specific output is produced from the given inputs.

Interpretable AI models enable humans to anticipate a model’s predictions for a given input and recognize when it produces an error.

Explainable AI (XAI):

Focuses on giving clear, understandable reasons for model outcomes. It seeks to answer the question, ‘Why did the AI make this decision?’ It builds on interpretability while drawing on insights from human-computer interaction, law, and ethics.

Explainability is crucial in high-stakes scenarios, where lives or sensitive data are involved.

Human-Centered AI (HCAI):

Focuses on developing and implementing AI technologies that prioritize human needs, principles, and skills. It aims to augment human capabilities and promote well-being, rather than overriding human roles. It also considers the cultural, social, and ethical impacts of AI, ensuring systems are inclusive, usable, and advantageous for everyone.

HCAI is closely related to Human-AI Interaction, which studies how humans and AI systems communicate and cooperate effectively.

In summary, HCAI is an integrative approach that connects responsibility, fairness, explainability, safety, and inclusivity to ensure AI systems serve human interests.

Sustainable / Green / Net Zero AI:

Is an approach and a movement aimed at aligning AI development with environmental sustainability. It focuses on minimizing the carbon footprint of AI systems.

Unlike conventional methods, Green AI incorporates eco-friendly practices throughout the entire AI lifecycle, from design and development to deployment and ongoing operation.
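As a rough illustration of what measuring a carbon footprint can look like, the sketch below estimates the emissions of a compute job as energy used multiplied by the carbon intensity of the electricity grid. This is a simplified back-of-the-envelope formula, not a method taken from the sources above; the function name, the overhead factor, and all input values are hypothetical.

```python
def estimate_emissions_kg(avg_power_kw, hours, overhead_factor, grid_kg_co2e_per_kwh):
    """Estimate emissions in kg CO2e for a compute job.

    avg_power_kw          average power drawn by the hardware, in kW
    hours                 duration of the job, in hours
    overhead_factor       multiplier for data-center overhead such as cooling
                          (1.0 means no overhead); an assumed input
    grid_kg_co2e_per_kwh  carbon intensity of the electricity used
    """
    energy_kwh = avg_power_kw * hours * overhead_factor
    return energy_kwh * grid_kg_co2e_per_kwh


# Hypothetical training run: 2 kW for 100 hours, 50% overhead,
# on a grid emitting 0.5 kg CO2e per kWh.
emissions = estimate_emissions_kg(
    avg_power_kw=2.0,
    hours=100.0,
    overhead_factor=1.5,
    grid_kg_co2e_per_kwh=0.5,
)
print(f"Estimated emissions: {emissions} kg CO2e")
```

Even a coarse estimate like this makes the levers visible: shorter or more efficient runs, lower overhead, and cleaner electricity each reduce the result.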

Conclusion

All these AI values and virtues work hand in hand. While different sources may use overlapping terms, the underlying message remains the same.

For AI to truly benefit humanity, it must serve all people equally, respecting their dignity, their resources, and the planet. It must also be predictable and understandable, not only to technical experts but to everyone affected.

When these principles come together, AI can empower people, guide informed decisions, and contribute positively to society and the environment.