Data Auditing: The Foundation of Responsible AI in Medical Imaging

In medical imaging, AI models are often evaluated through performance metrics such as accuracy, sensitivity, and specificity. Yet, a model that performs well in a controlled lab setting may fail once deployed in real clinical environments.

Why? Because an AI system is only as good as the data that shaped it.

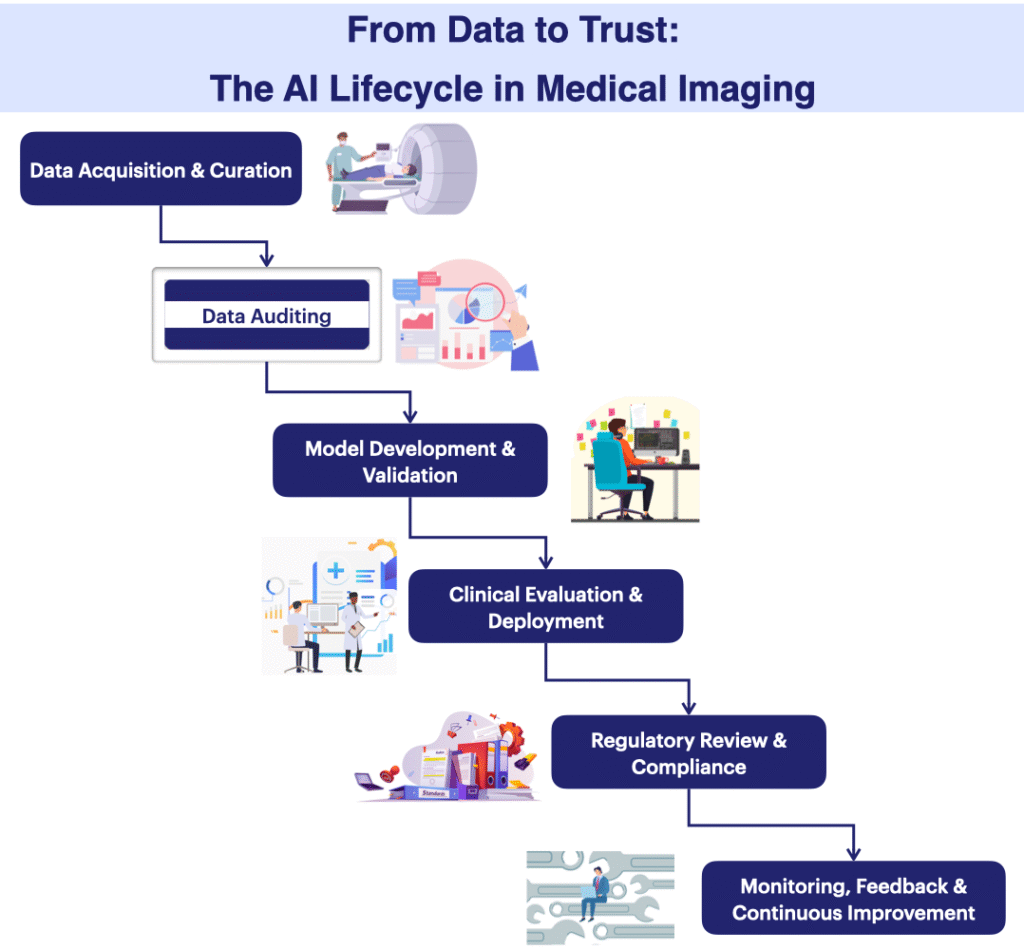

Before training any neural network, before tuning hyperparameters or optimizing inference times, the first responsible step is to audit your data.

A data audit is not a bureaucratic exercise. It is a scientific and ethical necessity.

To understand the values and ethical principles that shape responsible AI, you can read more in our AI values blog post.

What is a data audit in medical AI?

A data audit is a structured review of the datasets used for model development, aimed at assessing their quality, integrity, and dependability.

Frameworks such as METRIC illustrate how the field attempts to formalize data quality and governance concerns. In practice, however, effective data auditing in medical imaging relies less on checklist compliance and more on context-specific judgment about data assumptions, limits, and risks.

The dimensions proposed by METRIC fall under five broad categories (adapted from METRIC, npj Digital Medicine, 2024):

- ⚙️ Measurement process: Conditions that affect the reliability of the collected data, arising from human or machine behavior.

- ⏱️ Timeliness: Whether the dataset is being used at a suitable time relative to its creation or most recent update.

- 🩻 Representativeness: The level of correspondence between the dataset and the specific population (patients) the application targets. This addresses issues such as demographic and source variability, image quality and resolution, population diversity, dataset scale, and class proportionality.

- 💡 Informativeness: How well the data communicates useful information. This includes characteristics such as understandability, compactness, and non-redundancy.

- 📁 Consistency: Compliance with rules and regulations, data soundness and plausibility (e.g., flagging an implausible value such as age=200), dataset uniformity, and changes in distribution over time.
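Some of these dimensions can be partly checked with simple scripts over the dataset's metadata. The sketch below is only an illustration, not part of METRIC: the record fields (`patient_id`, `age`, `label`, `acquired`), the 0 to 120 age range, and the five-year staleness threshold are all assumptions chosen for the example, and real thresholds would depend on the clinical context.

```python
from collections import Counter
from datetime import date

# Hypothetical metadata records; field names and values are made up for illustration.
records = [
    {"patient_id": "p1", "age": 54, "label": "normal", "acquired": date(2023, 5, 2)},
    {"patient_id": "p2", "age": 200, "label": "lesion", "acquired": date(2022, 11, 18)},
    {"patient_id": "p3", "age": 67, "label": "normal", "acquired": date(2016, 1, 9)},
    {"patient_id": "p4", "age": 41, "label": "normal", "acquired": date(2024, 2, 27)},
]


def implausible_ages(rows, low=0, high=120):
    """Consistency: IDs whose age falls outside an assumed plausible range."""
    return [r["patient_id"] for r in rows if not (low <= r["age"] <= high)]


def class_proportions(rows, key="label"):
    """Representativeness: share of each value of `key` in the dataset."""
    counts = Counter(r[key] for r in rows)
    total = sum(counts.values())
    return {value: n / total for value, n in counts.items()}


def stale_records(rows, reference_date, max_age_years=5):
    """Timeliness: IDs acquired more than `max_age_years` before `reference_date`."""
    cutoff_days = max_age_years * 365  # rough cutoff, ignores leap days
    return [
        r["patient_id"]
        for r in rows
        if (reference_date - r["acquired"]).days > cutoff_days
    ]


print(implausible_ages(records))
print(class_proportions(records))
print(stale_records(records, reference_date=date(2024, 6, 1)))
```

Checks like these do not replace clinical judgment. They only surface records that deserve a closer look, such as the age=200 entry above.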

Audit Process Overview

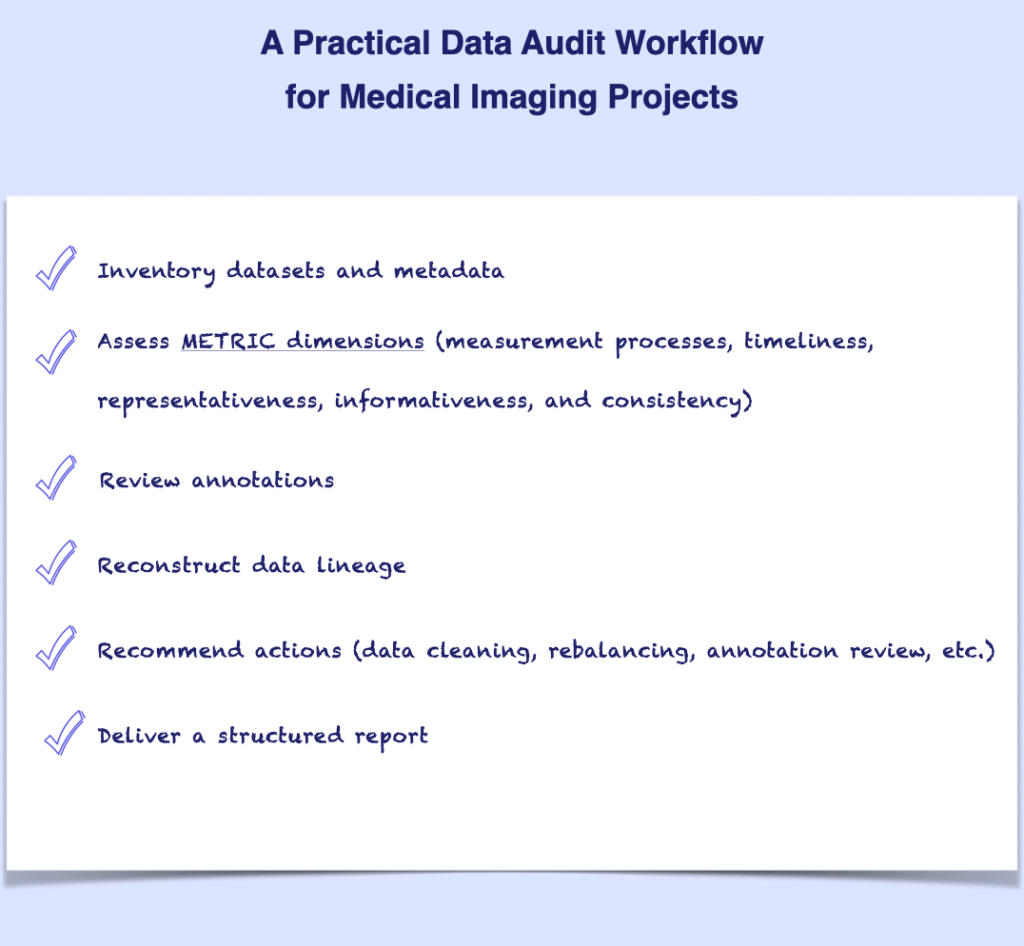

Here’s an example of a practical checklist to guide a responsible data audit in medical imaging projects:

In practice, each of these steps requires careful adaptation to the clinical context and the characteristics of the data. The framework is a starting point for structured auditing, not a fixed procedure.

Why data auditing matters

Thorough data auditing uncovers both the strengths and the weaknesses of the datasets at hand. It enables responsible AI by reinforcing transparency, improving reliability, building trust, and ensuring compliance.

This includes:

- Reinforcing responsible AI: An audit helps answer questions about transparency, explainability, fairness, and inclusiveness.

- Ensuring clinical reliability and robustness: Strong internal validation does not guarantee high performance on external data. Data auditing helps identify the causes of lower external performance, such as class imbalance, biases, labeling errors, and non-uniformity across sources.

- Building clinical trust: Radiologists and clinicians often ask, “What exactly did the model learn from?” A clear audit trail builds trust and helps communicate the model’s strengths and limitations in a transparent way.

- Supporting regulatory compliance: Regulatory bodies in the medical field expect AI developers to demonstrate data governance and provenance, bias assessment, transparency, human oversight, robustness, and traceability.
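One way to make the gap between internal and external data concrete is to compare how a categorical attribute is distributed in each dataset. The sketch below uses total variation distance, which is one possible measure among many and is not prescribed by any framework mentioned here. The scanner vendor names and counts are invented for the example.

```python
from collections import Counter


def distribution(values):
    """Turn a list of categorical values into a {category: proportion} mapping."""
    counts = Counter(values)
    total = sum(counts.values())
    return {category: n / total for category, n in counts.items()}


def total_variation_distance(p, q):
    """Half the sum of absolute differences: 0 means identical, 1 means disjoint."""
    categories = set(p) | set(q)
    return 0.5 * sum(abs(p.get(c, 0.0) - q.get(c, 0.0)) for c in categories)


# Hypothetical scanner vendors for an internal (development) and an external site.
internal_scanners = ["vendor_a"] * 8 + ["vendor_b"] * 2
external_scanners = ["vendor_a"] * 3 + ["vendor_b"] * 5 + ["vendor_c"] * 2

tvd = total_variation_distance(
    distribution(internal_scanners),
    distribution(external_scanners),
)
print(round(tvd, 2))
```

With these invented counts the distance is about 0.5, which would suggest a substantial shift in scanner mix between the two sites. What counts as a worrying value is a judgment call that depends on the attribute and the clinical application.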

Data auditing also strengthens a project’s strategy. It does so by:

- demonstrating governance, leadership, and accountability: key pillars of responsible AI.

- preventing months of wasted development caused by flawed data.

- improving the AI system’s credibility for investors and partners.

- reducing regulatory risk and accelerating compliance readiness.

Discussion and Conclusion

Responsible AI begins long before the first line of code. Many AI projects rush into modeling, yet auditing is where true project maturity begins.

A data audit is not optional. It is both an ethical obligation and a scientific best practice. By deeply understanding your data – its origin, diversity, and limitations – you lay the groundwork for AI models that are fair, reliable, transparent, explainable, and clinically meaningful.